The Data Accessibility Checklist

The last trend I heard from the BI crowd was this concept of Data Portability. It came out around the time of GDPR and was all about individuals’ right to own - and move - their own data into whatever silo(s) they wanted.

Data Portability was focused primarily on personal data - name, email, address etc - and this was a good place to start because no matter what platform you are offering, or what domain you are operating in, personal data is generally the same. This sameness makes it a good candidate for portability as the content is more or less unchanged between platforms, even if the structure differs. So, the DP community tried to come up with a common structure for describing this subset of data - allowing the transfer to be seamless between any two types of (conforming) platforms.

But that is not what I want to talk about. I want to throw my hat in the ring and try to predict the next phase in big data - Data Accessibility.

Unlike portability, which requires that data is re-structured as part of the transfer, data accessibility concentrates only on one’s ability to retrieve data from a certain platform. It is not concerned with what is done to that data later. Store it in CSV, dump it in a data lake, or feed it to your cat - I don’t care. I’m simply interested in your ability to get the data in the first place.

To clarify - while Data Accessibility doesn’t care what you do with the data, it is extremely concerned that you can do whatever you want. More on this below.

Why should you care about data accessibility

Everybody’s data these days is stored in one or more cloud platforms. It’s your data, but you have almost no control over it:

if you cancel your subscription, you’ve lost your data

if you want to do some reporting on your data, you can’t because it is accessible only via the cloud app (except for APIs - we’ll get to that below…obviously)

if you or your staff change or break some data, you have no way to revert it unless the cloud app specifically supports that (which is very rare)

Now, all of this is regrettable, however as an app developer myself I want to make clear that I am certainly not having a go at cloud service providers. Because my newly coined Data Accessibility phrase has not caught on (yet), these requirements are simply not front-of-mind for potential customers and therefore not worth an investment by the cloud platforms. Furthermore, quite frankly, it’s expensive to build these features into a cloud platform - especially when you are starting out.

Regarding the first point - customer awareness - this is regrettable but I believe optimistically that it will change over time as people cotton on to the fact that their “new oil” is tied up with somebody else.

Regarding the second point however - developer resources - it doesn’t have to be this way. And this brings me to the crux of my article - APIs & Data Accessibility.

APIs and the 9 essential features for data accessibility

At SyncHub, all we do all day is extract data from cloud platforms. Literally, all day - like, millions of records every day. Because we integrate with so many different cloud services - no two the same - we have come across a multitude of factors which we believe define data as “accessible”, and an even greater number of approaches to serving them.

So, without further ado, here are the essential features that any modern API needs to support if they want their data to be truly accessible:

Let’s explore each in more detail…

More a video person? We have a walkthrough on modification filters on YouTube.

Extracting data can be broken down into two main functions - backdating historical data, and then keeping it up to date in real-time. If your data set is small, you can simply set a timer (ie. every five minutes), then query for all the data and merge your changes into your local copy each time. But in most cases, this is not practical. Consider a large ERP that had 100,000 customers in its database. The processing power to pull this information down, likely hold it in memory, then loop over it to detect changes is unrealistic. Not to mention the pressure it would put on the cloud service as you sucked the guts out of their system…every five minutes.

Date modification filters (DMFs)

No, the best way to query data is to query it smaller chunks. This reduces the burden on both systems, and also creates resilience (consider, if the cloud service went offline as you were processing the 99,999th record - doh!). And the best way to break down these blocks is date modification filters.

DMFs consist of two parameters with which you query a list of API objects:

modified since - return all records which have been modified since this date

modified until - return all records which were modified before this date

So, a typical loop for SyncHub may be to set a date range of say, 24 hours, then query for blocks of data one-day at a time. Some systems can be quite “peaky” with their data (I’m looking at you cafes, with your lunchtime rush). In this case, no problem - your Data Sucker 2000 (TM) can smartly adjust its date range filter accordingly - perhaps for example reducing the window to 15 minute blocks over busier periods.

IF-MODIFIED-SINCE headers

Yeah - thanks but no thanks. This is great once you’re up to the real-time portion of your data management, but are almost useless if you are trying to back-load historical data (unless you are lucky enough to be able to employ our sorting/paging trick - see below).

Nope, without a modified until filter as well, you’re not going to get 100% on my Data Accessibility scorecard.

Versioning

A close second to DMFs is version-numbering. I’m told that Twitter was one of the early adopters of this method. Version-numbering involves the cloud service recording a unique number against every record in its database, and incrementing that number every time the entity changes. Thus if we take a Customer domain as an example:

| Action | Version |

| Customer A created | 1 |

| Customer B created | 2 |

| Customer A updated | 3 |

| Customer A updated again | 4 |

| Customer B updated | 5 |

| Customer A deleted | 6 |

| And so on... |

Some services choose to use a global version number, meaning that no two entities across any two domains will ever share the version number. However for our purposes, retaining a unique number across a single domain (i.e. Customer in our example) is sufficient.

From the consumer’s side, instead of asking for “everything between these two dates”, we ask for “everything between these two version numbers”. However, there are a couple of hurdles which makes this slightly harder to work with, in my opinion:

you have to record the last version number range that you queried. Yes, when using DMFs you have to record the date-range, but recording date ranges is a little more natural to me

requesting up until a maximum version number is pretty weird, because (at least when updating historical data), you have no idea what a reasonable “next” version number might be. Most times, the only reasonable method is to simply add X to the (previously stored) minimum version. However, there is a lot of variance in the results returned here. For example, if you add +1000 to the minimum version, do you end up with 1000 new records? Or one record that was modified 1000 times?

version numbers are abstract - if a customer asks us to re-sync data from March 2020, we have no idea what version numbers will encompass this. Our best solution is some kind of bi-splitting algorithm to quickly zero in on the range, but this is costly in terms of time and API calls. (Actually, to be fair, at SH we have another trick - we store the version number against the modification timestamp for each record, so we can actually do a lookup in our existing data and quickly find the version range. But not everybody has that luxury)

Unfortunately, while I can list the drawbacks of version-numbering, I’m unable to list any benefits. I honestly do not know why a team would choose to filter their data in this way, unless they are simply piggy-backing off of some other distributed GUID/locking system which they may already be using (e.g. for micro services and eventual consistency).

More a video person? We have a video on paging on YouTube

Another crucial component when pulling down large quantities of data, is paging. Where DMFs are about splitting your data across queries, paging is about splitting your data within a single query. Consider, if your query results in 200,000 records being returned, your system would likely:

call the API

wait while the cloud presented the data

wait while the data was transferred

wait while you deserialized the data

wait while you process/store the data

This process might take literally hours to complete. And, if there is a single interruption at any point, you have likely lost the entire block of data and have to start again.

Paging mitigates this issue by breaking the result into smaller chunks (“pages”) and returning them one at a time - say, 1,000 pages each with 200 records. This means that any interruption will lose you only 200 records of data, plus it gives both systems time to “breathe” between requests - keeping the servers free-flowing for other consumers.

Many cloud services enforce a page-size limit of their own, and that’s fine (except for one of our connectors - not naming any names - where the page size is only 25 records), but personally I like to have an option as a developer and usually set the page size to between 100-200 records, depending on what we’re doing with it.

There are a few ways to skin this paging cat, so let’s examine the most popular…

Paging via query parameters

The most basic (and most common) paging method we encounter is simple enough, essentially just appending a pageNumber and (optionally) pageSize variable to your request payload. For example, if the required dataset consisted of 5 records…

| ID | Name |

|---|---|

| 1 | Jenny |

| 2 | Bruce |

| 3 | Larry |

| 4 | Francesca |

| 5 | Boden |

…and the page size was two records at a time, then we would get all our data with three requests:

| Request | Response |

|---|---|

| /Person/List?pageNumber=1&pageSize=2 | (Jenny, Bruce) |

| /Person/List?pageNumber=2&pageSize=2 | (Larry, Francesca) |

| /Person/List?pageNumber=3&pageSize=2 | (Boden) |

Easy enough, right? The only caveat is the final page. In this case, you know that the page size is 2, and the final result (Boden) is just one record, therefore there can be no more records. However, what if there were six records? The only way that you know there is no more data, is by calling the API a final time and confirming that there are zero results. Not the end of the world - but still an unnecessary overhead as we’ll see below.

Paging via meta properties

The next_url(or by any other name) method works by appending meta-data to the payload returned by your first call. Whereas a non-paged record set may return just the requested data…

{

data:[

{

PersonID: 1,

FirstName: "Jenny"

},

{

PersonID: 2,

FirstName: "Bruce"

}

]

}

…a next_url payload will annotate the payload with meta-data concerning subsequent records:

{

meta:{

total_records: 5,

page_size: 2,

page_number: 1,

next_url: 'https://api.myplatform.com/ref=kkajaueh78UY'

},

data:[

{

PersonID: 1,

FirstName: "Jenny"

},

{

PersonID: 2,

FirstName: "Bruce"

}

]

}

Note, of course, the presence of the meta property, which encapsulates information about the current paging state. Using this method, the developer does not have to track and increment a “page_number” variable, they simply make a subsequent call to the URL provided by the next_url property, which encodes the information need by the cloud service to return the next set of paged data.

Quick aside: Many systems that offer this type of meta-data will include additional properties concerning total records, page size and page number. I’ve always found this strange. First, although “total records” is interesting, I’ve never actually found it useful - but clearly to provide this, the request did a “double hop” (once to get the data, once to count the pages), which is a waste of resources/time. Second and third - thanks, but…why? I already know the page size and page number because I told you what they were when I requested the resources.

There are two advantages to this method, and it is why it wins in my book:

the developer does not have to retain the “state” of the loop (e.g. the page number)

there is no silly “empty data set” check required to complete the paging loop - the absence of a next_url parameter is enough to indicate that it’s time to stop. Quite simply, this saves an additional API call (and every bit counts)

More a video person? We have a walkthrough on data structures on YouTube.

This one is a little hard to articulate, but I will try my best. But first, let’s stop for a cold bear.

Ah… that’s better.

So, where was I - ah yes, nested data. You must remember that most APIs are not developed for my new Data Accessibility philosophy in mind. Instead, they tend to be geared towards either:

creating data (e.g. creating an order in a POS system, or an invoice in an Accounting system); or

retrieval of specific data records

It is this second “feature” which brings about one of the least-helpful “helpful” features of most the APIs we deal with - nested data. Let’s consider things from the cloud service’s point of view first though, because it is perfectly reasonable.

Meet Jim

Meet Jim - say hi Jim.

“Hi, I’m Jim”

Jim is the lead architect for a hot new SaaS accounting platform. He works very hard - see how gnarled his hands are - that’s what happens when you cut code 10 hours a day.

Funny story: Jim got the job because in the interview they asked him how he could become a 10x developer. And Jim said “what, a 2x developer??!!” Classic Jim, right?

The first job he is assigned requires reducing the pressure that third-party developers are putting on the system via their API calls. He pulls up the logs and finds that the top requested endpoints for the last 30 days are:

| Endpoint | # calls | Duration (ms) |

| /invoice/{id} | 56,780 | 809,788 |

| /invoice/{id}/items/{itemid} | 140,122 | 1,706,641 |

| /customer/{id} | 51,182 | 733,343 |

It’s pretty clear from this that customers are querying for invoice headers then going back and making subsequent calls to retrieve invoice items and customer details for each invoice.

So, Jim decides to nest his data. Now, when people query the invoice endpoint, they also get a breakdown of items and customer. Next month, his stats look like this:

| Endpoint | # calls | Duration (ms) |

| /invoice/{id} | 55,283 | 2,303,112 |

| /invoice/{id}/items/{itemid} | 5,122 | 51,012 |

| /customer/{id} | 1,182 | 10,322 |

If we take the number of milliseconds as vaguely analogous to the pressure on the system, then Jim has reduced the stress by almost 30%. Boom! Take a bow Jim:

“Ah, shucks”

And remember - especially in these days of cloud computing - less server usage is literally less cost for the business. Plus - extra win - the third party developers are happy because they need to make only one call to get full invoice details - one call, one JSON parse, one atomic unit….etc etc.

But…I’m not happy

Nested data is tough for Data Accessibility because it introduces an extra layer of interdependence that quite frankly is unnecessary. Better from a DA point of view to have each endpoint completely isolated from the others - more like the architecture that Jim initially inherited. Why? Well, consider these points:

data requests are smaller and more granular, so if there is a problem with a particular record, it is far easier to isolate, retry, skip, etc…

we have the freedom to choose exactly which data we want - and when. Perhaps we don’t need customer information at all - so why on earth would it be delivered when we are querying for invoices? Or, maybe we do want it, but it is lower priority - better to get the invoice info first, and the customer info afterwards

most often, the destination that the third-party developer is pulling the information in to is not nested (consider, for example, a relational database). In these cases, the developer has got to unravel the received entity in order to store it in their own data store. This often involves creating additional child/parent foreign keys as well, which are usually omitted/inferred with nested responses and thus require additional developer-smarts

Here’s the thing though: Jim was right in making his decision. It saved his company money and made life easier for (most of) their third-party developers.

So, I’m absolutely not saying that Jim’s new architecture is wrong. But, to be a truly Data Accessible platform, an API needs to serve both use cases.

Oh, data

(That was a pun - I went to write ODATA, and….well….here we are)

So yeah, ODATA is actually an ideal solution for Data Accessibility. It lets callers specify exactly which fields they want, in exactly what order, within exactly which filters.

The ODATA endpoints that we connect to with SyncHub are by far the easiest. But, I admit, I haven’t taken the time to learn how to actually implement an ODATA API myself. My guess is that at its most basic, it simply maps queries to SQL statements. But I can’t see how it could (easily) work with anything but all-or-nothing permissions architectures. Plus, it must wreck havoc on your indexing. So, I’m not advocating ODATA for every API - I wouldn’t do it myself - but it is certainly a quick option and perhaps an ideal auxiliary API for some platforms.

More a video person? We have a video walkthrough here.

Thus far, we have only considered how we use an API in terms of checking for modified records, and then getting those records in bulk.

However, equally important to Data Accessibility is being able to retrieve a single record at a time. In an ideal scenario, this is almost never required as the pages of modified data are sufficient to describe all the data we need, when we need it. Certainly, with SyncHub, this accounts for over 99% of all data retrieval operations we do.

However, there are times when we may want to get items one-by-one. And unfortunately this seemingly innocuous requirement can cause far more than 1% of the headaches when connecting with a cloud platform.

Header retrieval

In SyncHub we have a mode which we use against fragile APIs and thus where more resilience is needed. Here, our modification checks consist of slightly modified steps:

get the lists of modified records (as per our paging and modification mechanisms, described above); then

store the headers of the modified records, making each as “‘modified” and thus requiring a re-sync; then

iterate over our list of modified headers, retrieving the single record one-by-one

This increases the pressure on the API and our system by a factor of approximately 100x *, so we do not use it lightly. However it is necessary in some situations. And you can see how in this example, it is necessary to have an accurate mechanism for arbitrary single-item retrieval.

* yes, 100x: Consider - if an endpoint serves data in page sizes of 100 records (100 is pretty typical), then you get 100 records for every one API call. But, if you need every record one at a time….well, that’s 101 records (the initial call, plus one for each record) **

** Ouch

Summary headers

This pet-hate of mine ties in with our discussion earlier about nested records, but it equally applies to flat-record retrieval as well. Consider, for example, Wrike and their Task endpoint. Here is what we get when we query the /tasks endpoint for modified records:

{

"id": "[hidden]",

"accountId": "[hidden]",

"title": "How-To Guide: Personal Space",

"status": "Active",

"importance": "Normal",

"createdDate": "2019-09-16T00:50:31Z",

"updatedDate": "2019-09-16T00:50:31Z",

"dates": {

"type": "Backlog"

},

"scope": "WsTask",

"customStatusId": "[hidden]",

"permalink": "https://www.wrike.com/open.htm?id=[hidden]",

"priority": "2236d4008000000000005c00"

}

…and now look at the additional info we get if we request the /task (singular) endpoint for the same task:

{

"id": "[hidden]",

"accountId": "[hidden]",

"title": "How-To Guide: Personal Space",

"description": "<h3><b>What Is a Personal Space?</b></h3>It's a private part of the...<br />",

"briefDescription": "What Is a Personal Space?It's a private part of the workspace for your eyes only. You can keep your personal to-do lists,...",

"parentIds": [

"[hidden]"

],

"superParentIds": [],

"sharedIds": [

"[hidden]"

],

"responsibleIds": [],

"status": "Active",

"importance": "Normal",

"createdDate": "2019-09-16T00:50:31Z",

"updatedDate": "2019-09-16T00:50:31Z",

"dates": {

"type": "Backlog"

},

"scope": "WsTask",

"authorIds": [

"[hidden]"

],

"customStatusId": "[hidden]",

"hasAttachments": true,

"permalink": "https://www.wrike.com/open.htm?id=[hidden]",

"priority": "2236d4008000000000005c00",

"followedByMe": true,

"followerIds": [

"[hidden]"

],

"superTaskIds": [],

"subTaskIds": [],

"dependencyIds": [],

"metadata": []

}

As you can see, there is significantly more information - dare I say critical information, such as the full text of the description.

In these cases, no matter how good the paging/modification queries are, if we want every scrap of information then we are obliged to call back to the API for every item we download. This most certainly is not “accessible”.

Now again, just like Jim’s prior optimization around nested entities, this is likely done to reduce bandwidth and overall server stress for the cloud service. In fact, with a company as big as Wrike, they probably went to lengths to find the optimal balance between the data they deliver in the summary vs the data delivered in the full payload. So, I am not saying that this is a bad or wrong decision. However, for the purposes of Data Accessibility, it is counter-productive.

Soft-deletes are a concept used in the database community and their presence (or absence) in an API is almost certainly a direct reflection of the decisions made at the database layer during app development.

Simply put, a soft-delete does not actually delete a record from the database. Instead, the record is simply marked as deleted. This actually creates quite a lot more work for both database designers and the programmers that query that database:

the mark is usually done by adding an additional column to the database table. Something like IsDeleted, IsArchived, IsActive, WhenDeleted etc etc

every time the data is queried, the programmer needs to append to the WHERE clause something akin to “…

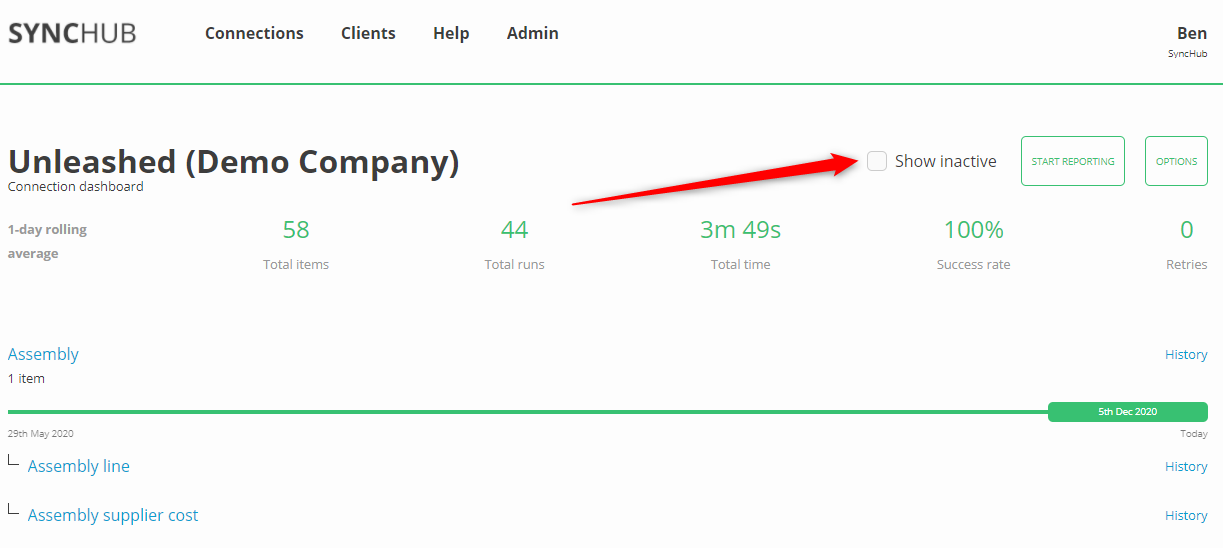

and where IsActive = TRUE”and in many cases, this additional filter can propagate all the way up to the UI, resulting in those checkboxes you sometimes see in your apps saying “Include archived” etc

Why on earth would a team decide on introducing this vastly more complicated construct in the data store? Well, a couple of reasons:

data is valuable and storage costs on a per-record basis are virtually negligible these days. Who knows when or if you might need this deleted record years down the track?

historical reporting (we’ll elaborate more below)

foreign key integrity. I won’t get into this here, but it is fundamental to relational database design and if you’re nerdy enough to be reading an article on Data Accessibility then you probably already know what this means

(A digression on historical reporting)

Imagine that you are a BI analyst tasked with creating a report which compared Sales by Product. Your SQL query may look something like this:

select Product.Name, sum(Order.Total) as Total from Order inner join Product on Order.ProductID = Product.ProductID group by Product.Name

In the first month, the report for February shows totals as follows:

Sales by Product

Giving us a total of $12,800 worth of sales - not too bad for a computer repair store.

Now, once winter comes to an end, the store ceases to sell apples and thus removes the product from their database. If the management team returns to look at historical sales, they’ll see a different report for February:

Sales by Product

It’s obvious what has happened here. The sales didn’t disappear, but because the product information in the database is no longer available, the inner join in the report query failed to account for these historical sales. At best, you could update the query to a left join, but that would still leave you with a single “Unknown product” category on your chart (which, by the way, was the aggregate of all deleted products, not just apples).

This example is simple but adequately indicates why database designers go to so much effort to implement soft-deletes in their data stores. So, now that I have definitely convinced you of its merits…let’s move on.

Soft deletes + APIs

As I said above, implementation of a soft-delete will often propagate all the way up to the UI level, and the same can be said for APIs. An API in this scenario must consider that while most people do not want information on deleted items, that information must still be available somehow. Let’s quickly examine a couple of ways that this can manifest:

IsActive entity flags

As a baseline, entities returned from these APIs must now have an additional property analogous to the soft-delete property used in the database - WhenDeleted, IsActive etc.

When the consumer of the API receives these entities it is their decision to filter the records by this flag or not. Obviously at SyncHub we retain these entities - but we mark them as deleted in our data warehouse (to facilitate the historical reporting example described earlier).

Deletion filters

Further to the above (or very occasionally in lieu of), many APIs provide optional filters which allow consumers to specifically include/exclude deleted records. This is usually something in the form of “includeInactive=TRUE” in the API call. Usually when this option is present then the default is to exclude the deleted records, which I think is fair enough.

On balance though, I prefer deleted records to be included by default - simply because it is easy for a developer to overlook this additional filter option and inadvertently omit a (sometimes) large amount of information from their queries.

A workaround to hard-deletes

There is one little nuance which lets us actually work around APIs which permanently delete their records. You see, even if the cloud platform no longer has a record of the deleted item, we do. And this is because we have pulled it down earlier into our data warehouse.

As long as the data sync was made before the record was deleted, our clients will have a permanent record of these items and their historical reports still work as expected.

Further to this, we know that the record is deleted because the API will now return a 400 or similar error when we try to request it. Thus, we can mark our copy of the record as being deleted (i.e. a “soft-delete”) and thus allow our users to differentiate between active/inactive products just as if the original API soft-deleted the record in the first place.

Cool huh?

The caveat here is that your data warehouse needs to begin populating data before data is removed, which is why it is critical to pull all information down as soon as you can - even if you don’t need it at the time.

If you want more details on exactly how we do this at SyncHub, we’ve got a separate article on Deletion Management here.

With the notable exception of Deputy, every API I’ve dealt with has some kind of what we call “throttling“, meaning that they allow a maximum of X API calls per period. For example, typical limits are:

150 calls/minute

2 calls/second

5000 calls/day

Note that most APIs use a combination - X calls per minute, up to a maximum of Y calls per day.

Limits are good

Imagine that you desperately needed data from your platform, but it was locked up because some rascal on the other side of the world was running a scraper or bot that was hammering the API at 100,000 requests/second.

Now, most cloud services are hosted on a shared infrastructure (servers, databases, web apps…), so this increase in resource utilization by one party (rascal) means less availability for everybody else.

If only there was some way that this person (rascal) was limited to a maximum number of requests per minute, so that everybody else could use the service as well. Well there is, and we call it throttling. The vast majority of cloud services employ it, and it is a requirement for our Data Accessibility checklist.

API throttling is kind of like paid parking - it’s a pain when you have to play by the rules, but if nobody did then it would be chaos.

In many cases, APIs can vary limits at their discretion. For example, SyncHub has higher rate limits for approximately half of our connectors - because we have shown ourselves to be conscientious and respectful with the API, and we have a valid use case for increased limits. (Presumably also, it is because our developers are very charming.)

However even with our higher allowance, we can sometimes take weeks to onboard historical data. Consider this example of a recent customer using Vend:

| API limit | 1500 / minute |

| Age of company (i.e. years to backdate) | 10 years |

| Total orders | 17.5 million |

| Total other (e.g. customers, products) | 1.5 million |

| 19 million records | |

| Time to backdate | = 19m / 1500 |

| = 12,666 minutes | |

| = 8.79 days to sync | |

And that assumes there is no server downtime at either end and everything basically runs perfectly.

Handling limits

Once an API limit has been reached, the API needs to advise the calling platform as such. This is most typically done by throwing a 429 Too Many Requests HTTP error code. A developer can detect this error and then wait an appropriate amount of time before their limit is lifted and they may re-query again.

What is an “appropriate” amount of time, you ask? Well, along with the 429 code, a good API will also provide an indication of when the restrictions will be lifted. This is done in a variety of ways, depending on the whims of the API in question. The two most common we have found are the RETRY-AFTER and X-RATELIMIT-RESET headers:

The RETRY-AFTER header

This provides an integer value representing the number of seconds from “now” until the limit will be lifted. The developer simply has to pause their sync for this period of time:

var seconds = request.Headers["Retry-After"]; this.PauseUntil = DateTime.Now.AddSeconds(seconds);

The RETRY-AFTER header is endorsed on the MDN site, so I’m inclined to think it’s quasi-official.

The X-RATELIMIT-RESET header

This works in a fashion similar to the RETRY-AFTER header, however the value is relative to UNIX epoch time.

Custom headers and properties

As with most things API-related, the lack of an enforced standard means that cloud services are allowed to define their rate-limit responses however they like. At SyncHub, we’ve seen variance in both header names and date/second format, and no convention seems to be emerging as the leader.

Oh, there is one exception though. We’ve seen some APIs return with….nothing at all. That’s right, no indication whatsoever of the time when the throttling will be lifted. In these cases, we wait thirty minutes before trying again. It is regrettable, not because I worry about overburdening their system (or ours), but because we can give no indication to our users about what is happening. Compare this with the specific date provided by the methods aforementioned, and you can see why we cringe when these 429s reveal themselves.

Showing limit progress

Of course, pausing your calls after you’ve received your 429 notice has a couple of drawbacks. First and most obviously, it is a redundant call - wasting the resources of both your system and the cloud service.

But there is another, more subtle reason to proactively avoid 429s, and that is paging. Consider this:

you make a call for all sales orders made in February. For architectural reasons, your GetSales() call is atomic, meaning that it needs an all-or-nothing response

the cloud service returns results in pages of 100 records, and you’ve made 90,000 sales that month (this figure isn’t ridiculous by the way, plenty of our franchise customers are running 3,000+ sales/day across just a handful of cafes)

this means you’ll need 900 consecutive API calls in order to get your orders, but you’ve already used 4,500 of your 5,000/day allotment…

…and thus your call fails after the 500th page

Oh dear. All that previous work and activity has gone to waste. If only there was a way to know beforehand whether you had the resources left to make the call. Well, this is where our other throttling-related headers come in to play:

| Header | Description |

|---|---|

| X-RateLimit-Limit | The total number of calls you can make within the current period |

| X-RateLimit-Remaining | The remaining number of calls you have in the current period |

| X-RateLimit-Reset | The number of seconds remaining before the current period ends (and thus your X-RateLimit-Remaining value resets to the X-RateLimit-Limit) |

Aside: As with the 429 retry-after data, these figures can come down in forms other than response-headers. But, in general, the data remains the same.

Now, using these values, you can monitor your API limits in real-time and make smarter decisions about querying large blocks of data. Cool huh?

In summary, responsible and clearly-described API throttling should be a first-class citizen when building a highly accessible cloud service. Without this, you will not get full marks on our Data Accessibility scorecard.

Quite honestly, I’ve never had a use case where I care about the order that my data comes down. Most generally, I need all of the information within a given filter, and I store it immediately in my own data store. From there, I can order/sort however I want - depending on the scenario.

However, I do appreciate some use cases where it may be useful for sorting to be applied by the cloud service before returning results:

your API data is rendered directly to the user. For example a javascript-powered site may list customers ordered by name, paging through results when required (e.g. when a human literally clicks the “next page” button). In these cases, the developer does not have the luxury of pre-loading all the data in their interim data store first, and thus cannot “sort”

actually, that’s the only example I can think of but I’d already done two bullet points so I had to fill this space

A quick aside on performance. If an API allows data to be sorted by any arbitrary field, this tells me something about the back-end driving the cloud service. Either they’re using using a modern NOSQL datastore (which often index everything by default); or - if they are using an old-fashioned relational database (which most of us are) - they must be indexing the **** out of it….right?

A welcome side-effect

Having said that, there is one side-effect of sorting that is critical to the performance of some our connectors at SyncHub, and the reason I have included it in my Data Accessibility list. Consider, if you will, an endpoint that returns sales orders. The endpoint offers the following features:

a modified-from header

paging

These are both ticks in the Data Accessibility checklist, but there is one thing missing which prevents us from giving it full marks. And that is a modified-until header.

As I mentioned in our section on date modification filters, a modified-from header is only useful for retrieving (near) real-time data. If you are back-loading historical data, it is pretty useless. Unless, that is, you can combine it with some smart sorting and paging.

Consider the following results returned by our fictional API, where we want orders between the two hours of 9am and 11am, on 1st Feb 1979:

// /sales?from="1979-02-01T09:00:00Z&pageSize=4&page=1" [ { saleID: 189, total: 19.58, modified: "1979-02-01T09:00:56Z" }, { saleID: 200, total: 11.51, modified: "1979-02-01T10:30:00Z" }, { saleID: 5698, total: 119.00, modified: "1979-02-01T14:15:00Z" // YIKES!!! }, { saleID: 618, total: 1.10, modified: "1979-02-01T10:45:00Z" } ] // Now, get our next page: // /sales?from="1979-02-01T09:00:00Z&pageSize=4&page=2" [ etc etc ]

Yikes - what is that third record doing there? Well, remember that the API does not accept a modified-until filter and thus our call is getting everything after 1st Feb 1979 at 9am.

Note also that this is also only the first page - there could be hundreds or thousands (or millions!) of records that occurred since 1979. Even though we only want a two-hour period, we are forced to get everything because (for all we know) there might be a relevant match in the last page.

But now, what if we could order by modification date? Here is our (re-ordered) result from our query:

// /sales?from="1979-02-01T09:00:00Z&pageSize=4&page=1" [ { saleID: 189, total: 19.58, modified: "1979-02-01T09:00:56Z" }, { saleID: 200, total: 11.51, modified: "1979-02-01T10:30:00Z" }, { saleID: 618, total: 1.10, modified: "1979-02-01T10:45:00Z" }, { saleID: 5698, total: 119.00, modified: "1979-02-01T14:15:00Z" // YAY!!! } ] // Do we have a record whose modification date is greater than 11am? If so, stop paging...otherwise continue below: // /sales?from="1979-02-01T09:00:00Z&pageSize=4&page=2" [ etc etc ]

This time, you’ll note that the out-of-range date is last in the result set (obviously). Furthermore, because I know the records are ordered by modification-date, I know that all subsequent pages will have dates greater than this. Thus, they will fall outside my range and I don’t need to even bother requesting the pages!

Suddenly, I’ve increased my efficiency to become almost as efficient as “proper” from-until filters! I will get at most [page size] additional entities, but these can be easily truncated in memory after the fact. In terms of API usage, there is a 1/[page size] chance that I’ll make a additional redundant call at the end (think about it), but that is barely a blip in the scheme of things.

Look how many explanation points I put in that prior section. This excites me.

The real world

So, how often can this hack be applied? Well, remember that our ideal scenario is having from and until filters in the first place. But (at SyncHub), of those that only have a from filter, approx 1/3 can be salvaged with our sorting hack. Here’s a breakdown (mostly because I wanted to try out the Pie Chart feature in SquareSpace):

So, in the scheme of things, perhaps 10% of all queries can be salvaged. This looks small on a pie chart, but is massive in terms of API calls when you’re dealing with throughput at SyncHub’s level. Reduction in API calls means less processing power on both our servers and the cloud services’. This in turn means less dollars spent, and yes - less impact on the environment, if you’re keeping score.

In summary, I don’t consider ordering/sorting to be a first-class requirement of a truly Data Accessible API. However, if an API does not meet their date-modification requirements then this can be their get-of-jail-free card.

Ah, dates and timezones - my old nemesis, we meet again. I wrote this blog post a few years ago on just this subject, but apparently not all API developers have read it. I won’t repeat myself here on the vagaries of date storage and comparison, so let me cut to the chase:

Store. Your. *******. Dates. In. UTC…….Please.

It amazes me how many “modern” APIs still require you to query the API in the timezone which the data was created in. SyncHub’s server has been set to GMT time. If we want to query data in Australia, in their own time zone, we have to consider the following:

add 7 hours if they are in Perth….

…but 10 hours if they are in Sydney…

…unless it is currently daylight savings - and the period we are querying is not in daylight savings time - in which case we need to add or subtract an hour…

…unless of course the period we are querying covers a DST boundary, in which case one of the dates is subtracted an hour, but the other remains the same…

Actually, I’ve purposefully over-complicated this. In truth, what we do in actuality is capture the timezone of the source client (e.g. Pacific/Auckland), then use our built-in programming tools to offset from the current (GMT) date when making our queries (this takes care of the DST issues too). I’m sorry, you’ve hit a sore spot with dates.

However, this simple answer assumes that we actually know the timezone of our client’s system. In our experience, approximately half of APIs offer this programmatically, which is fantastic - after authorizing the connection, we make a quick call to get the timezone, store it alongside the other connection meta-data, then use it to offset any date-related queries going forward.

But in the other half, our only option is to ask the human what their timezone is. And how many humans actually know this? It’s a lousy question to ask and interrupts the on-boarding experience for our users.

And don’t even get me started on our large franchise customers. In these cases, their stores are often spread across the country or even the world - each in a different timezone. How would you go about querying for aggregate sales within a single time period? (Answer: you can’t).

Introducing…Coordinated Universal Time

Coordinated Universal Time, or UTC (how come the acronym letters are in a different order….?), solves this, and programmers love it. Basically, instead of storing your time locally in your database (e.g. in Perth time, or New Zealand time, or London time), you do the offset to UTC immediately, and store that. Now, when we query for data, we don’t care what timezone it was created in. We just ask for data in terms of UTC time and everything comes out…well, perfectly.

The only drawback here is rendering the time back to the user. For example, if your shop is in Tokyo (UTC+9 hours) and you make a sale at midday, you don’t want your reports to say that it was made at 3am (i.e. nine hours earlier). Thus for the cloud-service developers, they need to make an additional offset every time they render the time to a human. But quite honestly, with modern software tools this is a trivial task and becomes habit very quickly. And it is a far-lesser evil that storing in local time.

Returning dates

The section (rant) above is in terms of querying data (e.g. getting orders between specific dates). However, the same problems occur when data is returned in local time. This is especially pertinent for SyncHub, where we aggregate data across multiple different cloud platforms. Without a common reference point for dates, then temporal reporting is at best useless and at worst misleading.

The only practical reference point is UTC, and this brings me to one of the only times SyncHub will modify your data - we automatically convert all relevant dates to UTC time, to facilitate just this scenario. You’re welcome.

In summary, if your API deals with local time in either the query filters or the returned data, then you do not get full marks in our Data Accessibility checklist.

And now, we come to the final aspect of my Data Accessibility checklist - pricing. Of all the cloud services that we connect to, approximately 36% will charge their customers additional fees in order to access their API. This means that you are effectively getting charged twice - once to put your data in to the cloud service (i.e. use the app), and the other to get your data back out.

Doesn’t seem right, does it? My instinct, in terms of Data Accessibility, is that this is borderline immoral. However, let’s take a minute to consider things from the cloud service’s perspective:

use of an API (especially by an ignorant or inexperienced developer) can result in unreasonably high stress on the server. This costs money, plain and simple

many cloud services have modular charging. If you consider API access as a “module”, then charging a fee for it is perfectly reasonable

services may offer a baseline limit on API access, but allow the user to purchase additional tiers (e.g. more request/minute or a higher daily total). This is a “user pays” system, fair and simple

So, I’m not going to boldly state that everybody should be able to get their data for free via the API, whenever they like. There are financial and equality issues to consider. Plus I clearly have a stake in the “free data access” game and I know I am not impartial.

However, objectively and with only the question of Data Accessibility in mind, it is clear that paywalls in front of your data clearly make your data less accessible and thus an API will lose points on the Data Accessibility checklist if it charges for API access.

Conclusion

Wow. Three months and 7,000 words later and I have finally come to the end of my post. It has been nice returning to this over the last few months, solidifying my thoughts on accessibility and being able to bring in real-world numbers from our real-world use cases. Some of these numbers actually surprised me when I ran them, so it has been a learning experience.

Developing an API can is ideally not an afterthought in your organisation, but it takes money and commitment to build it out alongside the other “front facing” features that are more likely to generate customers in the short term. However, even if you are not building out an API immediately, there are aspects of this article whose early implementation in your development will incur minimal marginal cost. For example, soft-deletes and UTC date storage have literally fundamental implications on how your API ultimately manifests.

I hope that readers of this article who are building out an API now might take on board some of these lessons from the perspective of us mere consumers. After all, we are customers of yours just as much as your regular app users. This is a financial decision as well - apps like Stripe, Xero, Pipedrive, and Shopify are famous for developing and nurturing their developer community - and the primary interface for this relationship is their API. The result of this relationship is ultimately better apps/plugins and happier end users. Or, more nerdily:

Good API development = happy developers = better apps = more customers.

Well, that’s how it seems to me anyway. Thanks for reading.

(BTW - this article is also featured on Medium)